Event Scripts in FDMEE

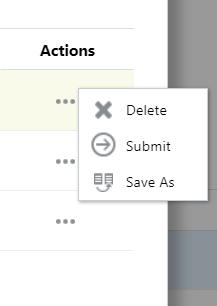

Event Scripts, located in the Script Editor within FDMEE, execute a task when certain events occur during the data load process. Each Event Script is customizable: users can use the scripts to perform a wide variety of tasks, such as sending emails, running calculations, or executing a consolidation. There are nine events, and a script can run either before or after each one, for a total of eighteen Event Scripts. The nine events are Import, Calculate, ProcLogicGrp, ProcMap, Validate, ExportToDat, Load, Consolidate, and Check, each with a Bef (before) and an Aft (after) script. To create an Event Script, select the type of event, choose whether the script will run before or after that event, and click ‘New’.
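The naming convention is mechanical: each script name is the prefix Bef or Aft joined to one of the nine event names. The short sketch below builds the full list from those nine events, which also confirms the count of eighteen. It is plain Python for illustration only, not a script you would paste into the FDMEE Script Editor.

```python
# The nine FDMEE events named in the article.
EVENTS = [
    "Import", "Calculate", "ProcLogicGrp", "ProcMap", "Validate",
    "ExportToDat", "Load", "Consolidate", "Check",
]

# Each event can have a script that runs before ("Bef") and after ("Aft") it.
PREFIXES = ["Bef", "Aft"]


def event_script_names():
    """Return every Bef/Aft event script name, grouped by event."""
    return [prefix + event for event in EVENTS for prefix in PREFIXES]


if __name__ == "__main__":
    names = event_script_names()
    print(len(names))  # 18
    print(names[:4])   # ['BefImport', 'AftImport', 'BefCalculate', 'AftCalculate']
```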

Here are descriptions of each of the Event Scripts:

BefImport – This script runs before the Location is processed.

AftImport – Runs after the Location is processed and the data table is populated.

BefCalculate – Runs only for a Validation run, before the Validation.

AftCalculate – Runs only for a Validation run, after the Validation.

BefProcLogicGrp – Runs before Logic Accounts are processed.

AftProcLogicGrp – Runs after Logic Accounts are processed.

BefProcMap – Called before the mapping process for the data table begins.

AftProcMap – Called after the mapping process for the data table has finished.

BefValidate – Checks if the mapping process has been completed.

AftValidate – Runs after the BefValidate Script.

BefExportToDat – Called before the data file is created for Export.

AftExportToDat – Called after the data file is created for Export.

BefLoad – Called before the data file is loaded to the Target Application.

AftLoad – Called after the data file is loaded to the Target Application.

BefConsolidate – Called when a check rule is included in the Location being processed. It can be used only if the Target Application is Hyperion Financial Management or Essbase.

AftConsolidate – Runs after the BefConsolidate Script.

BefCheck – Runs before the Check Rule.

AftCheck – Runs after the Check Rule.
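The Bef/Aft pairs above follow a simple before/after hook pattern. The sketch below models that pattern in plain Python; `run_event` and the `scripts` dictionary are hypothetical stand-ins for FDMEE's own machinery, which is not described here. In this sketch, an event with no script defined simply runs on its own.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for FDMEE's script lookup: keys are script names
# such as "BefLoad" or "AftLoad"; values are the code to run.
ScriptRegistry = Dict[str, Callable[[], None]]


def run_event(event: str, action: Callable[[], None], scripts: ScriptRegistry) -> None:
    """Run the Bef script (if defined), then the event, then the Aft script (if defined)."""
    before = scripts.get("Bef" + event)
    if before is not None:
        before()
    action()  # the event itself, e.g. the Load step
    after = scripts.get("Aft" + event)
    if after is not None:
        after()


if __name__ == "__main__":
    log: List[str] = []
    scripts: ScriptRegistry = {
        "BefLoad": lambda: log.append("BefLoad script"),
        "AftLoad": lambda: log.append("AftLoad script"),
    }
    run_event("Load", lambda: log.append("Load"), scripts)
    run_event("Check", lambda: log.append("Check"), scripts)  # no Check scripts defined
    print(log)  # ['BefLoad script', 'Load', 'AftLoad script', 'Check']
```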

One final thing: when creating an Event Script, make sure that there is a Script Folder within the Application Root Folder. Event Scripts are application specific, so they must be kept in the Script Folder of the corresponding Application's folder structure.
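The folder requirement can be checked with a short script. This Python sketch assumes the event scripts belong in a `data/scripts/event` subfolder of the Application Root Folder; that layout is an assumption, so confirm the actual Script Folder path in your own FDMEE application before relying on it. Because Event Scripts are application specific, the function takes one application's root folder at a time.

```python
import os

# Assumed location of the event script folder, relative to the
# Application Root Folder. Adjust to match your own application.
EVENT_SCRIPT_SUBDIR = os.path.join("data", "scripts", "event")


def ensure_event_script_folder(app_root: str) -> str:
    """Create the (assumed) event script folder under app_root if missing; return its path."""
    folder = os.path.join(app_root, EVENT_SCRIPT_SUBDIR)
    os.makedirs(folder, exist_ok=True)  # no error if the folder already exists
    return folder
```

Calling it a second time for the same application is harmless, since `exist_ok=True` leaves an existing folder untouched.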

Collected from https://hollandparker.com/blog/2016/04/21/event-scripts-in-fdmee/

Thanks,

Mady